A new Android app!

At work a couple of weeks ago, two of my co-workers were inventorying a large quantity of stock that had just arrived. They were hoping to scan the barcode of each item into a simple CSV file. Their first thought was obviously "there's an app for that". Turns out there wasn't. There are hundreds of barcode-scanning and inventory apps available, but none that simply scanned to a CSV list of barcodes and then allowed that CSV data to be emailed, saved, etc.

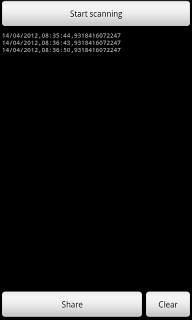

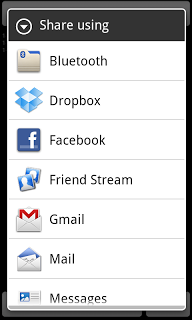

So as of yesterday, after 4 hours' work, I can now say there is such an app. Stock Scanner isn't pretty or feature-packed, but it fulfils the above requirement exactly.

Stock Scanner is available in a limited-scans free version, or a very cheap paid version, on